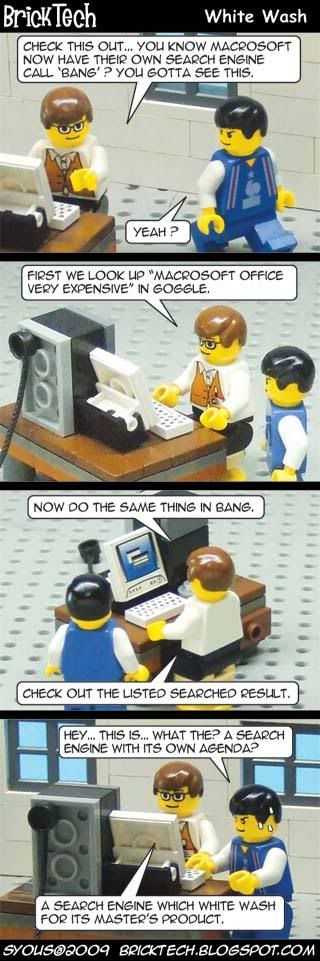

If you don't already know by now, the next standard in gaming graphics is DirectX 11. It ships with Microsoft's upcoming Windows operating system, internally versioned Windows 6.1 but better known as Windows 7 (not because 6+1=7 or because Windows Vista was Windows 6.0, but because Microsoft says this is the seventh release of Windows).

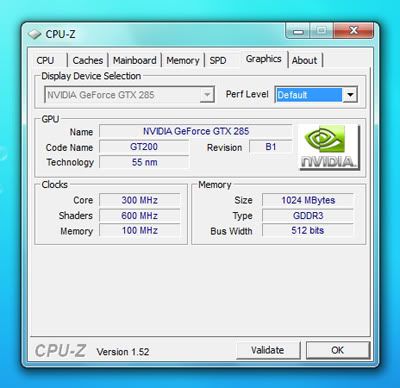

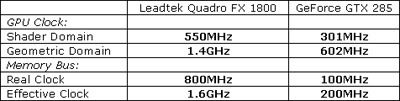

DX11 adds several new features, and one of them is Direct Compute. It is built on the new Compute Shader stage, which joins the Geometry Shader introduced in DirectX 10 (unified Shader Model 4) on Vista, and the Pixel and Vertex Shaders, which first appeared in DirectX 8 and reached Shader Model 3 in DirectX 9.0c on Windows XP.

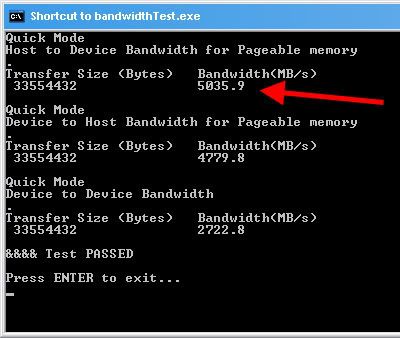

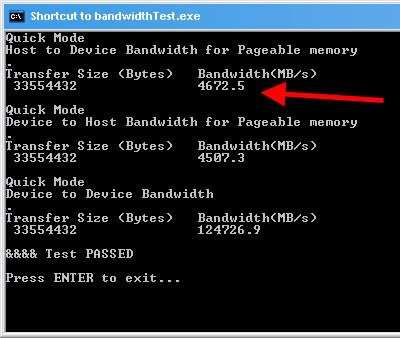

Direct Compute is an implementation of GPGPU (general-purpose computing on the GPU) technology. Basically, it uses your graphics card for processing alongside your processor, which can give you extra performance on work that splits into many parallel pieces. It can also be used for 3D ray tracing and video transcoding. But did you know DX11 can work with DX10 hardware?
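To make the GPGPU idea a bit more concrete, here is a minimal CPU-side sketch in plain C++ of how a compute dispatch is organized: one small "kernel" function runs once per data element, and the elements are split into thread groups. This is not the Direct Compute API. The names `scale_and_offset` and `dispatch` are made up for illustration, a real kernel would be written in HLSL, and a GPU would run the inner iterations in parallel rather than one after another.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical "kernel": the work one GPU thread would do for one element.
// In a real compute shader this would be HLSL; here it is ordinary C++.
inline float scale_and_offset(float x) { return 2.0f * x + 1.0f; }

// Emulates dispatching thread groups over a buffer (assumed helper, not a
// DirectX call). A GPU runs the threads of a group in parallel; this loop
// simply visits them sequentially to show how the indices are laid out.
std::vector<float> dispatch(const std::vector<float>& input,
                            std::size_t group_size) {
    assert(group_size > 0);
    std::vector<float> output(input.size());

    // Round up so a partially filled last group is still processed.
    const std::size_t groups = (input.size() + group_size - 1) / group_size;

    for (std::size_t g = 0; g < groups; ++g) {            // one thread group
        for (std::size_t t = 0; t < group_size; ++t) {    // one thread in it
            const std::size_t i = g * group_size + t;     // global thread index
            if (i < input.size()) {                       // skip padding threads
                output[i] = scale_and_offset(input[i]);
            }
        }
    }
    return output;
}
```

Calling `dispatch({0.0f, 1.0f, 2.0f}, 2)` processes two groups of two threads, with the last thread of the second group doing nothing because it falls past the end of the buffer. The speed-up on a real graphics card comes from the fact that no element depends on any other, so thousands of these tiny jobs can run at once.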

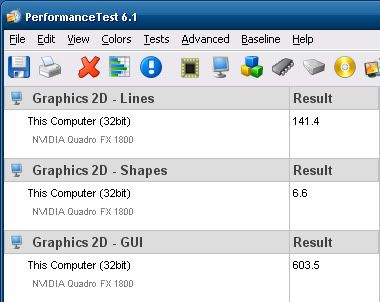

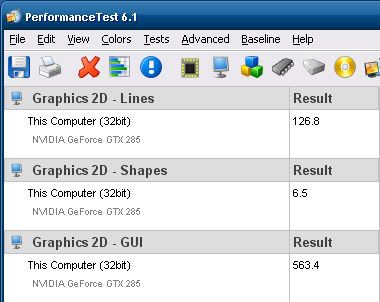

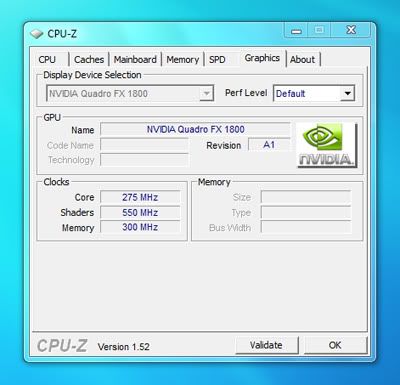

Unlike DX10, which required a DX10 gaming card (either AMD's ATI Radeon HD2000/3000/4000 series or NVIDIA's GeForce 8/9/200 series), DX11 can also run on DX10 gaming cards, albeit without the full feature set you get on DX11 gaming cards: the ATI Radeon HD5000 series, or the NVIDIA GeForce 300 series (which isn't out yet).
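The way DX11 manages this is through what Direct3D 11 calls feature levels: the same API runs on older cards, but reports a lower level with fewer capabilities. The sketch below is a simplified model of that idea in plain C++, not real DirectX code. The `FeatureLevel` enum, `Capabilities` struct, and `capabilities_for` function are hypothetical names. The shader profile strings and the tessellation requirement reflect my understanding of Direct3D 11, and compute shader support on DX10-class cards additionally depends on the driver, which this model does not capture.

```cpp
#include <string>

// Simplified stand-in for the Direct3D 11 feature levels relevant here
// (hypothetical enum, not the real D3D_FEATURE_LEVEL type).
enum class FeatureLevel { Level10_0, Level10_1, Level11_0 };

// What a game could expect from a card at a given level (illustrative only).
struct Capabilities {
    bool tessellation;            // hardware tessellation needs a DX11 card
    std::string compute_profile;  // compute shader profile the card can target
};

// Maps a feature level to its capabilities. On DX10-class levels the compute
// shader is a restricted subset, and the driver may not expose it at all.
Capabilities capabilities_for(FeatureLevel level) {
    switch (level) {
        case FeatureLevel::Level10_0: return {false, "cs_4_0"};
        case FeatureLevel::Level10_1: return {false, "cs_4_1"};
        case FeatureLevel::Level11_0: return {true,  "cs_5_0"};
    }
    return {false, ""};  // unreachable for valid enum values
}
```

A game written this way can ask for the level once at start-up and then switch off effects the card cannot do, which is why a DX11 title can still run, with reduced eye candy, on a DX10 card.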

As you might expect, this means DX11 can also work on Vista, and it will once Microsoft releases the Vista Platform Update. As with DX11 on DX10 cards, though, you may not get the full features of a pure DX11 platform, which is Windows 7 with a DX11 gaming card. Right now only ATI's HD5000 series is out; pair one with Windows 7 and you'll get the full DX11 deal. See here for a list of upcoming DX11 games.

Of course, with DX11 you can also play DX10 games, and you can see a list of DX10 games here. Just remember to update your DX files! If you haven't done so, you can get the update for free from Microsoft's site here (100MB). Even if you're using Windows XP with DirectX 9, it will update your files to the latest DX9, which might help if you're having problems running some games.
